CPUs and GPUs both play central roles in server performance, yet it is often unclear which of the two is better suited to a given workload.

CPUs and GPUs are distinct kinds of server processors, but they also work together in many systems. This article looks at how server CPUs and GPUs differ and where each one fits.

A Comprehensive Analysis of Server CPUs and GPUs

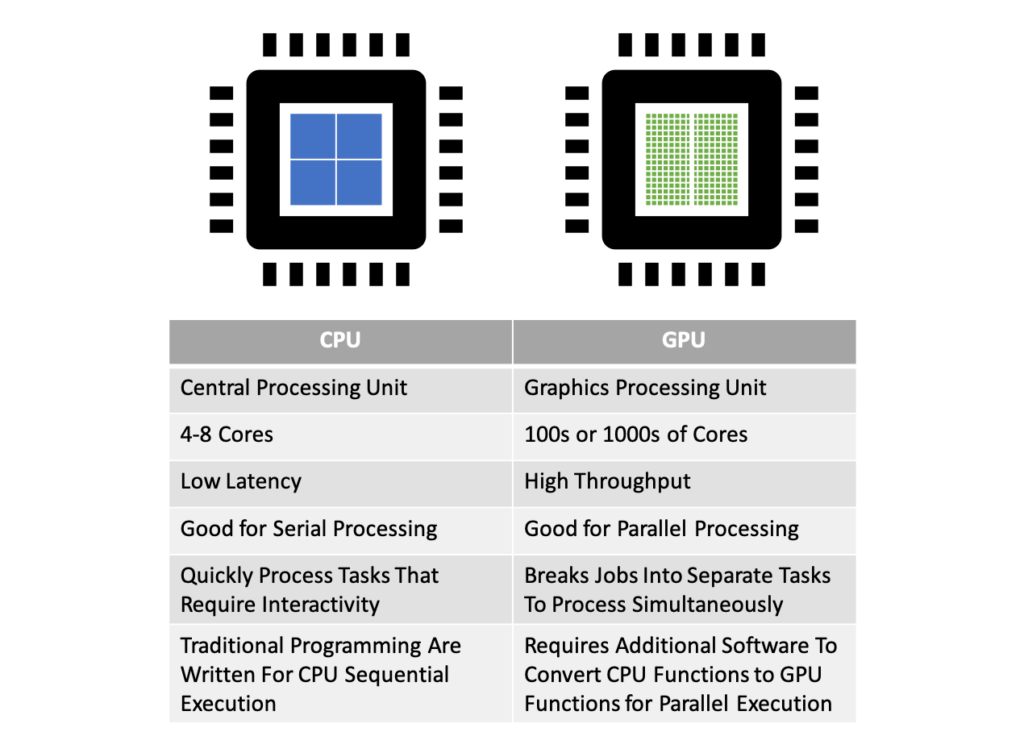

Built from billions of transistors, CPUs are indispensable components of modern systems: they execute the instructions that run computers, servers, and operating systems.

CPUs handle a wide range of workloads and excel at those that demand low latency and high per-core performance. A dedicated server typically has one, two, or even four CPUs, which take care of core operating system processing. CPUs act as powerful execution engines, applying a relatively small number of fast cores to individual tasks.

GPUs, by contrast, consist of many smaller, specialized cores that work on tasks simultaneously.

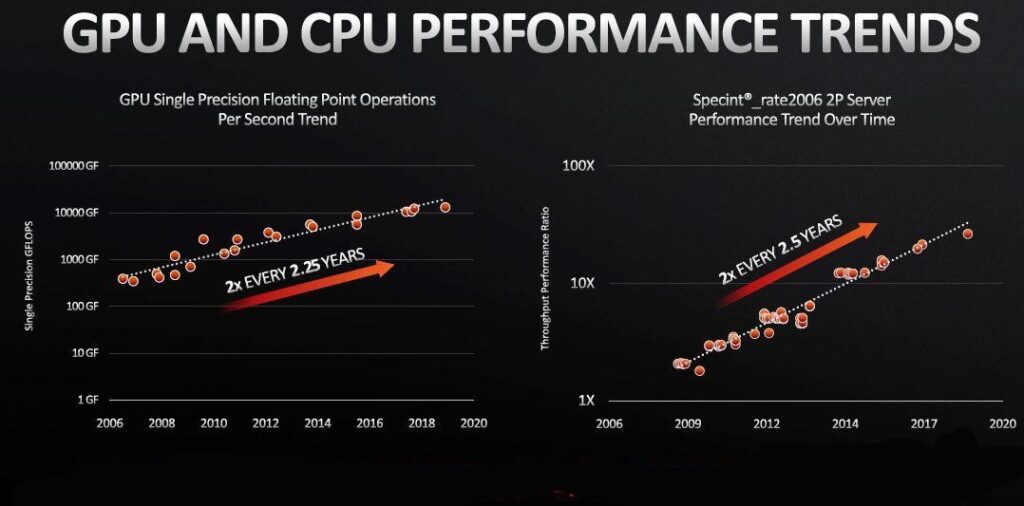

As a result, GPUs give servers strong image processing and parallel processing capabilities. GPUs often run at lower clock speeds than contemporary CPUs, but they pack far more cores onto the chip. Originally developed to render graphics for games, GPUs are now used in many other fields, including AI and high-performance computing.

Applications in Servers

An individual GPU core is less powerful than a CPU core, but a GPU can run a very large number of cores at once, which gives it higher overall performance on compute-dense tasks. In short, CPUs excel at coordinating complex overall operations, whereas GPUs excel at applying simple operations to large datasets.
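To make the "simple operation on a large dataset" idea concrete, here is a minimal Python sketch that mimics how a CUDA-style kernel is written: one function describes the work of a single thread (here SAXPY, `a*x + y` for one element), and a launcher computes how many blocks of threads are needed to cover the data. The names `saxpy_kernel` and `launch_saxpy` and the block size of 256 are illustrative assumptions. Python runs the simulated threads one after another, whereas a GPU would run them in parallel.

```python
import math

# Assumed block size: 256 threads per block is a common CUDA choice, not a rule.
THREADS_PER_BLOCK = 256


def saxpy_kernel(block_idx, thread_idx, block_dim, a, x, y, out):
    """Work done by ONE simulated GPU thread: a single multiply-add."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]


def launch_saxpy(a, x, y, threads_per_block=THREADS_PER_BLOCK):
    """Simulate a kernel launch by calling the kernel once per thread.

    On a real GPU these calls would execute in parallel; here they run in a loop.
    Returns the result list and the number of blocks that were "launched".
    """
    n = len(x)
    blocks = math.ceil(n / threads_per_block)  # enough blocks to cover n elements
    out = [0.0] * n
    for b in range(blocks):
        for t in range(threads_per_block):
            saxpy_kernel(b, t, threads_per_block, a, x, y, out)
    return out, blocks
```

For example, `launch_saxpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0])` returns `([12.0, 24.0, 36.0], 1)`. The per-thread function contains no loop over the data at all, which is exactly why this style of work spreads so easily across thousands of GPU cores.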

Server CPUs and GPUs differ not only in processing capabilities but also in the range of applications they serve. GPUs have spread into many new fields, but it would be inaccurate to declare either one superior to the other. In fact, the two often work best together.

Combining CPUs and GPUs increases an application's data throughput and its capacity for parallel computation. The main program runs on the CPU, and the GPU complements it by executing the application's repetitive computations in parallel.
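This division of labor can be sketched in plain Python, with a thread pool standing in for the GPU. It is a hypothetical illustration, not a GPU API: `host_program` plays the CPU's role (splitting the input, launching work, combining results), and `device_kernel` is the repetitive, independent computation that would be offloaded. CPython threads do not run Python code in parallel, so the sketch shows the structure of offloading rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def device_kernel(chunk):
    """Repetitive, independent work: square every element of a chunk.

    Stand-in for a GPU kernel; no element depends on any other element.
    """
    return [v * v for v in chunk]


def host_program(data, workers=4, chunk_size=1024):
    """Main program on the 'CPU': prepare the data, offload, then reduce."""
    # 1. Host-side control logic: split the input into independent chunks.
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    # 2. Offload the repetitive part to the 'accelerator' (a thread pool here).
    #    pool.map returns results in the same order as the input chunks.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(device_kernel, chunks))
    # 3. Back on the host: combine the partial results sequentially.
    return sum(sum(part) for part in results)
```

Calling `host_program(list(range(10)), chunk_size=3)` gives the sum of squares 0² through 9², that is, 285. In a real GPU program, step 2 would be a kernel launch plus data transfers, while steps 1 and 3 would stay on the CPU.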

To use an analogy, the CPU is the task manager of the whole system, coordinating the overall computing work, while the GPU specializes in narrower, dedicated tasks. For work that can be parallelized, a GPU completes more of it than a CPU in the same amount of time. Servers that pair CPUs with GPUs therefore deliver higher computing performance and data throughput, which can significantly improve data processing efficiency.
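How much a GPU helps depends on how much of the work can actually be offloaded. Amdahl's law captures this: if a fraction p of the original runtime is parallel work that runs s times faster on the GPU, the overall speedup is 1 / ((1 - p) + p / s). The helper below is a minimal sketch of that formula; the function and parameter names are our own.

```python
def amdahl_speedup(parallel_fraction, accel_speedup):
    """Overall speedup when only part of a program is accelerated (Amdahl's law).

    parallel_fraction: share of the original runtime that can be offloaded (0..1).
    accel_speedup: how many times faster the offloaded part runs on the GPU.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if accel_speedup <= 0:
        raise ValueError("accel_speedup must be positive")
    serial = 1.0 - parallel_fraction  # part that stays on the CPU
    return 1.0 / (serial + parallel_fraction / accel_speedup)
```

For example, if 90% of the runtime is offloaded and that part runs 50 times faster, `amdahl_speedup(0.9, 50)` is about 8.5, and no GPU, however fast, can push it past 10 because the remaining 10% still runs on the CPU. This is one way to see why the CPU side of a combined server still matters.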


Is GPU More Critical Than CPU in Servers?

To judge how important each one is, it helps to look at what each is used for.

A GPU server is a server equipped with one or more GPUs capable of executing thousands of parallel threads simultaneously. As Internet services have grown, more high-performance servers include GPUs, reflecting their advantages in parallel processing performance. For suitable workloads, this has improved data processing efficiency and yielded higher returns on investment for enterprises.

Despite the performance gains GPUs bring, CPUs remain indispensable server components. Every high-performance server, standard server, or computer needs a CPU. Server CPUs handle complex tasks and orchestrate the overall system; notably, they manage database queries and data processing operations.

Why Can’t a GPU Run the Operating System on Its Own?

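GPUs have limitations that prevent them from running an operating system on their own. A major one is that GPU cores are organized into groups (NVIDIA calls them warps) in which every core executes the same instruction at the same time, each on its own data. This execution model is a form of SIMD (Single Instruction, Multiple Data); NVIDIA calls its variant SIMT (Single Instruction, Multiple Threads). When cores in a group need to follow different code paths, those paths are executed one after another while the other cores wait.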
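The cost of lockstep execution can be illustrated with a simplified model. GPU threads run in groups that share one instruction stream (on NVIDIA hardware, a warp of 32 threads), so each distinct code path taken inside a group costs a separate pass while lanes on other paths sit idle. The toy function below counts those passes; it ignores real-hardware details such as differing path lengths and reconvergence, and its names are hypothetical.

```python
WARP_SIZE = 32  # NVIDIA warp width; other vendors group threads differently


def lockstep_cost(paths_per_lane):
    """Return (passes, utilization) for one lockstep group of lanes.

    paths_per_lane[i] names the code path lane i wants to run. Because the
    group shares one instruction stream, each distinct path is executed in
    its own pass while lanes on other paths are masked off.
    """
    if not paths_per_lane:
        raise ValueError("a group needs at least one lane")
    passes = len(set(paths_per_lane))  # one pass per distinct path
    utilization = 1.0 / passes  # in this model each lane is busy in exactly one pass
    return passes, utilization
```

With every lane hashing, `lockstep_cost(["hash"] * WARP_SIZE)` gives `(1, 1.0)`. If all 32 lanes instead need different code paths, as operating-system work such as handling different system calls might, the result is `(32, 0.03125)`: only one lane in 32 does useful work at any moment.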

This works well for a task made up of, say, 1,000 similar computations, such as hashing candidate passwords when cracking a password hash: the GPU assigns each computation to its own thread and runs them across its cores. Kernel operations such as writing files to disk or managing system state, however, are largely sequential and full of branches, so running them on a GPU would be significantly slower than on a CPU.
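The password-hash example is the kind of workload lockstep execution favors: every candidate goes through exactly the same hash function, and no candidate depends on another. The sketch below shows that shape in Python using SHA-256 from the standard library's `hashlib`; the function names and the 1,000 three-digit candidates are illustrative assumptions, and real password storage typically uses salted, deliberately slow hashes rather than plain SHA-256. On a GPU, each candidate would map to its own thread; here a list comprehension processes them in turn.

```python
import hashlib


def hash_candidate(candidate):
    """The single, identical operation every 'thread' performs."""
    return hashlib.sha256(candidate.encode("utf-8")).hexdigest()


def find_preimage(target_hex, candidates):
    """Brute-force search: hash every candidate and compare with the target.

    Each hash is independent of the others, which is what makes the search
    data-parallel; only the final comparison combines the results.
    """
    digests = [hash_candidate(c) for c in candidates]  # data-parallel step
    matches = [c for c, d in zip(candidates, digests) if d == target_hex]
    return matches[0] if matches else None
```

With `candidates = [f"{i:03d}" for i in range(1000)]`, calling `find_preimage(hash_candidate("042"), candidates)` returns `"042"`, and a target that matches no candidate returns `None`.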

CPU Use Cases

CPUs are well suited to tasks that require sequential algorithms or involve complex statistical computations, such as:

- Real-time inference and machine learning (ML) algorithms that are not easily parallelizable.

- Inference and training of recommender systems with memory-intensive embedding layers.

- Models that use very large data samples, such as 3D data, for inference and training.
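A sequential algorithm in this sense is one where each step needs the result of the previous step. The logistic-map iteration below is a hypothetical stand-in for such a workload (it is not taken from any of the use cases listed): its steps cannot simply be divided among thousands of cores, so the speed of a single core, the CPU's strength, determines how quickly the chain finishes.

```python
def logistic_map(x0, r, steps):
    """Iterate x_{n+1} = r * x_n * (1 - x_n) and return every value.

    Step n+1 cannot start until step n is done, so the loop is inherently
    sequential: extra cores do not shorten a single chain.
    """
    xs = [x0]
    for _ in range(steps):
        prev = xs[-1]  # dependency on the previous step
        xs.append(r * prev * (1.0 - prev))
    return xs
```

For instance, `logistic_map(0.25, 4.0, 1)` returns `[0.25, 0.75]`. Many independent chains (for example, different starting values) could still run in parallel on a GPU; it is the dependency within one chain that keeps it sequential.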

GPU Use Cases

GPUs are ideally suited for parallel processing and are the go-to choice for training AI models in most scenarios.

Enterprises generally favor GPUs because they can process many calculations in parallel. Examples of GPU use cases include:

- Neural networks

- Natural language processing (NLP)

- Accelerated AI and deep learning operations that involve massive parallel processing of data inputs.

- Gaming

- High-performance computing (HPC)
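Neural networks illustrate why GPUs dominate several of these workloads: a dense (fully connected) layer is a matrix product in which every output is an independent dot product. The pure-Python sketch below writes out one such layer; the function name and data layout (`weights[i][j]` connects input `i` to output `j`) are assumptions for illustration. Each iteration of the outer loop could run on its own GPU thread; GPU math libraries parallelize matrix products in a broadly similar way, with far more optimization.

```python
def dense_layer(inputs, weights, bias):
    """Compute outputs[j] = bias[j] + sum_i inputs[i] * weights[i][j].

    Every output j is an independent dot product, so on a GPU each one
    (or each tile of a larger matrix product) can go to separate threads.
    """
    n_in = len(inputs)
    n_out = len(bias)
    outputs = []
    for j in range(n_out):  # independent iterations: parallel on a GPU
        acc = bias[j]
        for i in range(n_in):  # the dot product for output j
            acc += inputs[i] * weights[i][j]
        outputs.append(acc)
    return outputs
```

For example, `dense_layer([1.0, 2.0], [[1.0, 0.0, 2.0], [0.5, 1.0, -1.0]], [0.0, 1.0, 0.5])` returns `[2.0, 3.0, 0.5]`. A real network repeats this for millions of weights and many layers, which is where thousands of GPU cores pay off.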
