How to configure Load Balancers for Cloud Servers

Introduction

Layerstack’s Load Balancers service is a robust, scalable load balancing solution built on our secure, distributed global network. It maximizes the capabilities of your applications by distributing traffic among multiple cloud servers, both regionally and globally. This guide outlines the features and configuration options of the Load Balancers service.

Algorithms

We have load balancing algorithms for a wide range of use cases, including:

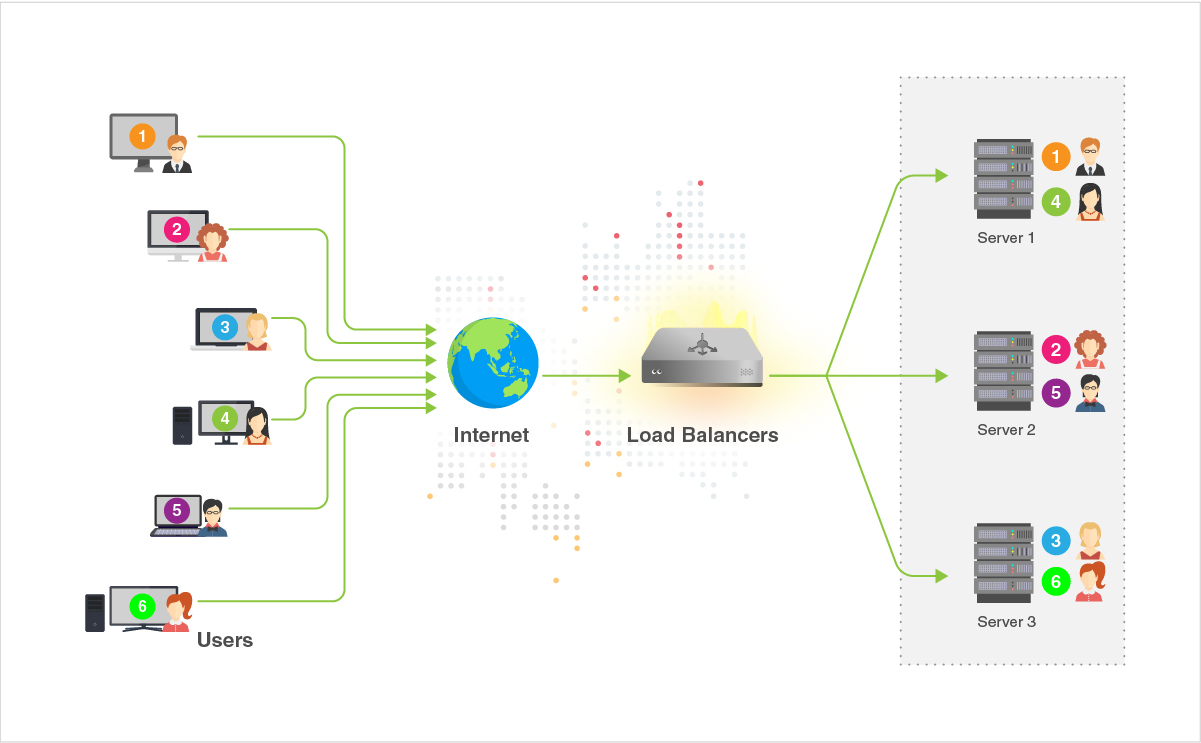

Round Robin: A standard technique that loops over your nodes to distribute requests evenly.
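As a rough illustration of the idea (not the service's actual implementation), round robin can be sketched in a few lines of Python. The node names below are hypothetical.

```python
from itertools import cycle

def round_robin(nodes):
    """Return an endless iterator that walks the node list in order."""
    return cycle(nodes)

# Hypothetical node names, used only for illustration.
nodes = ["node-a", "node-b", "node-c"]
picker = round_robin(nodes)

# Six consecutive requests visit each node twice, in a fixed order.
first_six = [next(picker) for _ in range(6)]
```

Each node receives the same share of requests regardless of how busy it currently is, which is why this approach suits pools of similarly sized servers.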

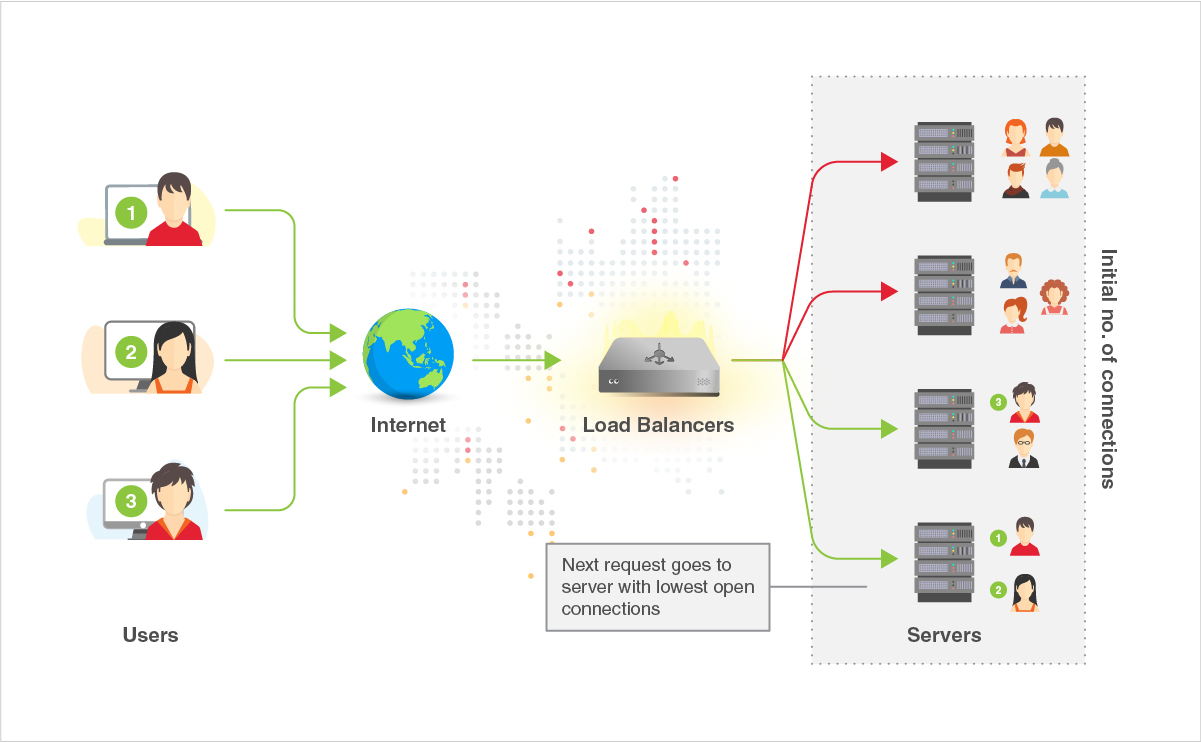

Least Connections: An adaptive distribution algorithm that directs requests to the nodes with the fewest active connections.
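A minimal sketch of this selection rule, assuming the balancer tracks an open-connection count per node (the names and counts are made up for illustration):

```python
def pick_least_connections(active):
    """Return the node with the fewest open connections.

    `active` maps node name -> current connection count.
    Ties go to whichever node was listed first.
    """
    return min(active, key=active.get)

# Hypothetical connection counts.
active = {"node-a": 12, "node-b": 3, "node-c": 7}

chosen = pick_least_connections(active)
active[chosen] += 1  # the new request now occupies one connection
```

Unlike round robin, the choice adapts to load: a node stuck on slow requests accumulates connections and is picked less often.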

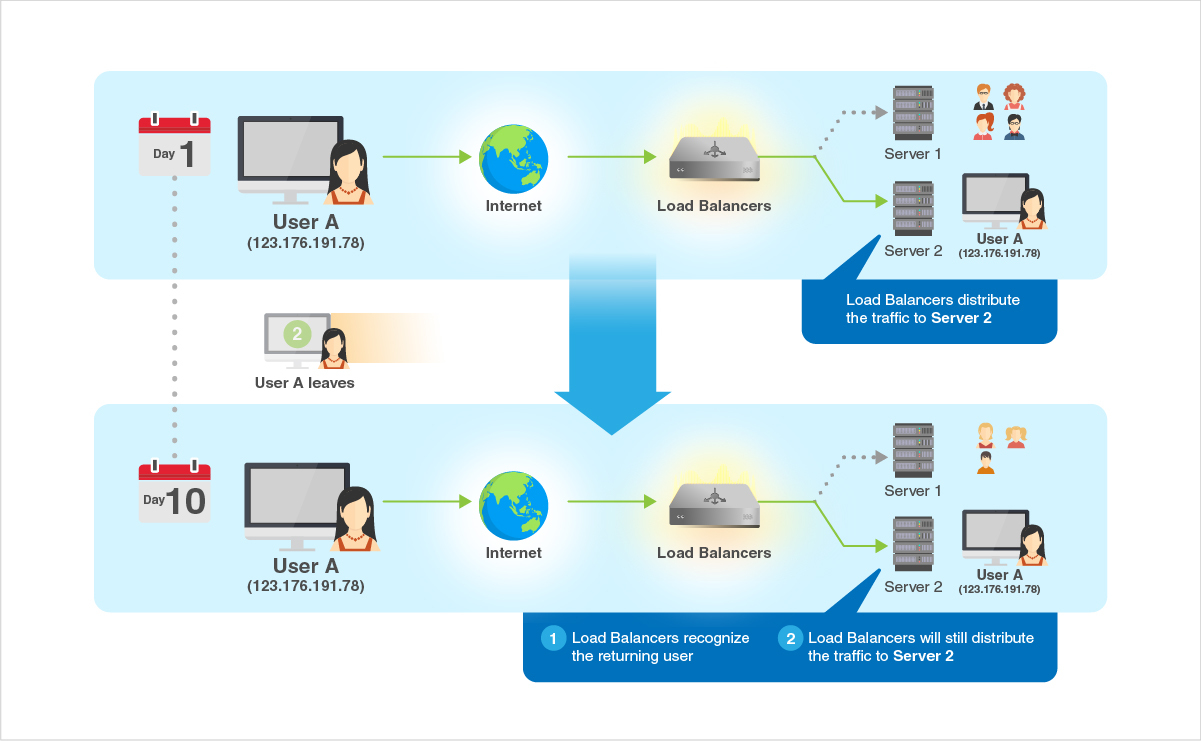

Source: A method of distribution that pairs users with nodes, allowing their requests to be served from the same node each time.
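One common way to achieve this pairing is to hash the client's IP address and use the result to index into the node list. The sketch below assumes that approach; the hashing scheme the service actually uses is not documented here, and the addresses and node names are illustrative.

```python
import hashlib

def pick_by_source(client_ip, nodes):
    """Map a client IP to a node; the same IP always yields the same node."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]

# Hypothetical node names and documentation-range IP addresses.
nodes = ["node-a", "node-b", "node-c"]
first = pick_by_source("203.0.113.7", nodes)
second = pick_by_source("203.0.113.7", nodes)
```

With this simple modulo scheme, adding or removing a node changes the mapping for many clients, so pairings hold only while the pool is stable.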

Use Cases

Load balancers serve a multitude of purposes. Here are some examples of where our Load Balancers service can help:

Application Resilience: By placing a pool of backend servers behind a single load balancer endpoint, user requests are distributed across multiple servers. As a result, the impact of a single server failure is significantly reduced. Combining this with our active and passive health checks adds further resilience and automated fault response.

Scalability: Backend servers that sit behind our Load Balancers service can be dynamically added and removed, facilitating seamless scaling of your applications.

HTTPS / SSL: By offloading HTTPS / SSL encryption to our load balancers, application deployments are far simpler. No longer is it required to distribute private SSL certificates to each backend server - a task that becomes increasingly difficult when scaling of servers is taken into account. Now, you can manage a single SSL certificate at a single point in the infrastructure, detached from application deployment.

Features

Our Load Balancers service supports an array of features that make deploying your applications simple and secure, including:

DDoS Protection

DDoS, or distributed denial of service, is a form of cyberattack that overloads applications with traffic, often resulting in your applications being unable to serve genuine customers.

Our Load Balancers service is capable of mitigating DDoS attacks of up to 20 Gbps, allowing you to rest easy while we keep your applications safe and secure.

Global Private Networking

Our Global Private Networking option allows you to extend the benefits of our Load Balancers service to your private network and its endpoints.

TCP / HTTP(S) Protocols

Depending on your requirements, you may want to handle network traffic differently for each of your applications. For example, you may want to offload SSL encryption from your applications and have our load balancers manage it for you.

Our Load Balancers service is capable of functioning as:

- A TCP (Layer 4) network load balancer, providing you with complete control of the network packets.

- An HTTP(S) load balancer built for web applications, extending the capabilities of our load balancers for your HTTP applications.

Sticky Sessions

Similar to the Source algorithm, sticky sessions allow users to be continuously served by the same backend server. However, unlike the Source algorithm, which operates based on user IP, sticky sessions function with application cookies.

This gives your application greater control over how your users’ requests are served by your backend servers, and the pairing persists for the lifetime of the cookie.

Sticky sessions can help keep session data on one server instead of spreading it across several, and can make for more efficient use of server resources. However, they can also hinder scalability, as requests cannot be distributed evenly across your server pool, and session data may be lost if the relevant server goes down.
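The cookie-based pairing described above can be sketched as follows. This is an illustration under stated assumptions, not the service's implementation: the cookie name `lb_backend`, the `route` function, and the backend names are all hypothetical.

```python
import random

COOKIE_NAME = "lb_backend"  # hypothetical cookie name

def route(cookies, backends):
    """Pick a backend for one request.

    Returns (backend, cookies_to_set). A valid cookie pins the request to
    its backend; otherwise a backend is chosen and a new cookie is issued.
    """
    pinned = cookies.get(COOKIE_NAME)
    if pinned in backends:
        return pinned, {}
    chosen = random.choice(backends)
    return chosen, {COOKIE_NAME: chosen}

backends = ["web-1", "web-2"]  # hypothetical backend names

# First request carries no cookie, so one is issued.
first_backend, issued = route({}, backends)
# The follow-up request presents the cookie and lands on the same backend.
second_backend, reissued = route(issued, backends)
```

If the pinned backend is no longer in the pool, the request is reassigned and a fresh cookie is issued, which is the point at which session data held only on the old server would be lost.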

TCP / HTTP Health Checks

By supporting multiple health check protocols, our Load Balancers service can determine the health and readiness of your application in either of the following ways:

TCP Connection: By attempting a TCP connection on a given port, your application’s health can be determined based upon whether the connection was established or failed.

HTTP Status / Body: By executing HTTP requests to your application on a given port and URL, your application’s health can be determined based upon whether the HTTP status or response body matches the desired outcome.
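To make the two check types concrete, here is a minimal sketch using only the Python standard library. The function names, default timeout, and expected values are assumptions chosen for illustration; they do not describe how the service performs its checks internally.

```python
import http.client
import socket
import urllib.error
import urllib.request

def tcp_check(host, port, timeout=2.0):
    """Healthy if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def http_check(url, expected_status=200, expected_body=None, timeout=2.0):
    """Healthy if the status matches and, when given, the body contains
    the expected text."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
            body = response.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses still carry a status and body worth comparing.
        status = err.code
        body = err.read().decode("utf-8", errors="replace")
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, DNS failure, or malformed response.
        return False
    if status != expected_status:
        return False
    return expected_body is None or expected_body in body
```

For example, `tcp_check("10.0.0.5", 443)` would test whether a (hypothetical) backend accepts connections on port 443, while `http_check("http://10.0.0.5/health", expected_body="OK")` would also confirm that the application responds with the expected content.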

Our health checks also work in two modes:

Active: Health checks that periodically check the health of your nodes using a specified request, such as a particular URL or port.

Passive: Health checks that observe how your nodes are responding to genuine connections and determine the health of your node even before the active check is able to report it as unhealthy.
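A passive check can be thought of as a running tally of how real requests fare. The sketch below assumes a simple rule, marking a node unhealthy after a number of consecutive failures; the class name and the threshold of 3 are hypothetical, and the service's actual criteria are not specified in this guide.

```python
class PassiveHealth:
    """Track consecutive failures observed on genuine traffic."""

    def __init__(self, max_failures=3):  # threshold is an assumed value
        self.max_failures = max_failures
        self.failures = {}

    def record(self, node, ok):
        """Record one real request: a success resets the failure streak."""
        self.failures[node] = 0 if ok else self.failures.get(node, 0) + 1

    def is_healthy(self, node):
        return self.failures.get(node, 0) < self.max_failures

tracker = PassiveHealth()
for _ in range(3):
    tracker.record("node-a", ok=False)  # three failed requests in a row
tracker.record("node-b", ok=True)
```

Because the tally updates on every request, a failing node can be taken out of rotation without waiting for the next scheduled active check.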

Configuration

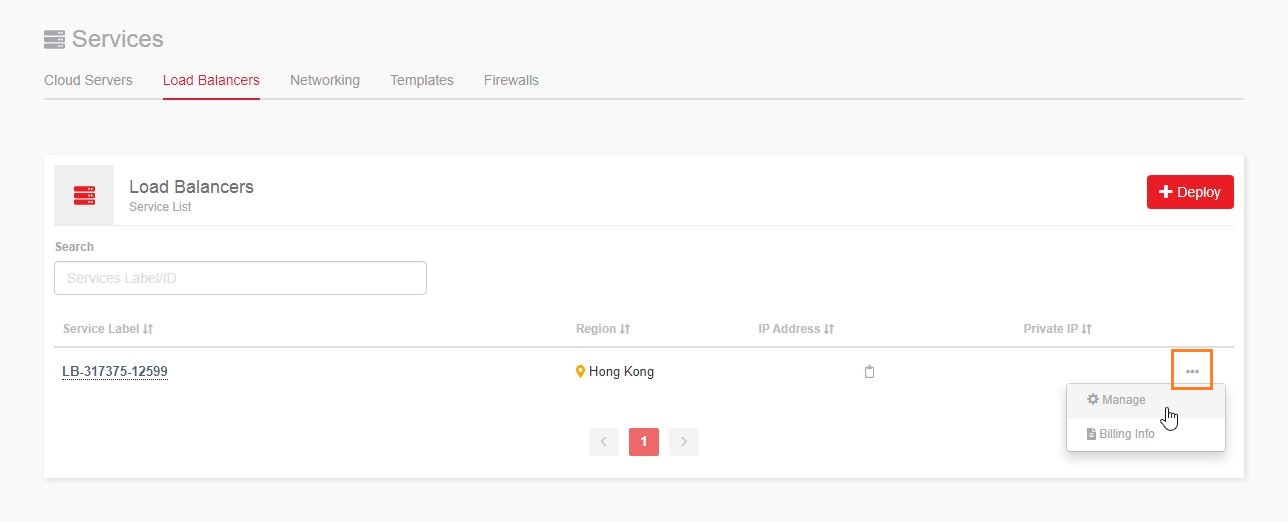

Load balancers can be provisioned and configured from the main dashboard of LayerPanel under Services > Load Balancers. Existing load balancers can be managed by selecting the ... drop-down menu to the right of each load balancer.

Settings

Load balancer configuration is managed with the following options:

Port: The port on which your load balancer will listen for requests, such as 443 for HTTPS or 80 for HTTP.

Protocol: Determines which protocol the load balancer will use to listen for requests, and can be configured as:

TCP: For TCP connections.

HTTP: For HTTP web applications.

HTTPS: For HTTP web applications that require SSL encryption.

Algorithm: Determines the method of request distribution.

Sticky Sessions: Enables or disables the use of sticky sessions. Set this to HTTP Cookie to enable.

Active Health Checks: Stipulates how the backend server is determined to be healthy or unhealthy. Passive health checks can be enabled here via the Enable Passive checks checkbox.

Backend Servers: The upstream nodes that serve your traffic are specified here. Each requires the following settings:

Server Label: The name of the backend server, selected from a drop-down menu.

Port: The port on which the server is listening and to which the load balancer should route traffic.

Weight: The priority a server has in serving requests.
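One common interpretation of weight is proportional: a server with weight 3 receives three times as many requests as a server with weight 1. The sketch below assumes that interpretation, which this guide does not confirm, and uses hypothetical server labels.

```python
from itertools import cycle

def weighted_rotation(weights):
    """Return an endless iterator in which each server appears in
    proportion to its weight (a simple weighted round robin)."""
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)

# Hypothetical server labels and weights.
weights = {"web-1": 3, "web-2": 1}
picker = weighted_rotation(weights)

# Over one full rotation of four requests, web-1 serves three and web-2 one.
one_rotation = [next(picker) for _ in range(4)]
```

Under this reading, a higher weight is a reasonable choice for servers with more CPU or memory than the rest of the pool.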